Are Your Teams AI-Ready? 7 Critical Data Quality Mistakes That Kill Enterprise Transformation (And How to Fix Them)

- VCM Management

- Dec 30, 2025

- 6 min read

You're sitting in another quarterly review meeting, watching your latest AI initiative produce results that make absolutely no sense. Your data scientists are scratching their heads, your CFO is questioning the investment, and you're wondering why your "intelligent" systems seem anything but intelligent.

Here's the uncomfortable truth: 82% of enterprise AI projects fail not because of the technology, but because of the data feeding into them. Your teams aren't AI-ready because your data isn't transformation-ready. And while everyone's focused on the latest algorithms and platforms, the real killer is lurking in your data quality fundamentals.

The irony? You're probably making the same seven mistakes that every other business leader makes when preparing for AI transformation. The difference is knowing what they are and how to fix them before they torpedo your next initiative.

The Hidden Cost of Dirty Data in AI Transformation

Before we dive into the specific mistakes, let's talk money. Poor data quality costs organizations an average of $12.9 million annually. In an AI transformation, that cost compounds, because machine learning models trained on dirty data don't just produce bad results: they produce confidently wrong results that can mislead your entire organization.

Your AI systems are only as good as the data you feed them. Garbage in, garbage out isn't just a programming cliché: it's the reason why your predictive analytics are predicting the past and your automation is automating chaos.

Mistake #1: Tolerating Human Error in Data Entry

The Problem: You've built sophisticated AI models, but Sally from accounting is still typing customer names with random capitalization and Bob from sales is entering phone numbers in seventeen different formats. Human error remains the single largest contributor to data quality degradation, corrupting your datasets before they even reach your AI systems.

Every typo, every copy-paste error, every misinterpreted field becomes training data for your machine learning models. Your AI learns from these mistakes, baking human errors into automated decisions that scale across your entire operation.

The Fix: Stop treating data entry like a necessary evil and start treating it like the foundation of your AI strategy. Implement automated validation rules that catch errors at the point of entry. Create drop-down menus instead of free-text fields wherever possible. Establish clear data entry guidelines and, here's the kicker, actually train your teams on them.
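To make "validation at the point of entry" concrete, here is a minimal sketch in Python. The field names, the `ALLOWED_REGIONS` set (standing in for a drop-down menu), and the `validate_customer` function are all hypothetical; your own forms and rules will differ.

```python
import re

# Hypothetical allowed values, standing in for a drop-down menu.
ALLOWED_REGIONS = {"NA", "EMEA", "APAC"}

def validate_customer(record: dict) -> list[str]:
    """Return a list of validation errors; an empty list means the record passes."""
    errors = []
    name = record.get("name", "").strip()
    if not name:
        errors.append("name is required")
    email = record.get("email", "").strip()
    # Deliberately loose check: one "@", no whitespace, a dot in the domain.
    if not re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", email):
        errors.append("email is not in a valid format")
    if record.get("region") not in ALLOWED_REGIONS:
        errors.append("region must be one of " + ", ".join(sorted(ALLOWED_REGIONS)))
    return errors

good = {"name": "Acme Corp", "email": "ops@acme.example", "region": "EMEA"}
bad = {"name": "  ", "email": "not-an-email", "region": "Europe"}
print(validate_customer(good))
print(validate_customer(bad))
```

Rejecting the record with specific messages, rather than silently "fixing" it, keeps the person entering the data in the loop, which is where the error is cheapest to correct.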

Consider implementing data literacy workshops across your organization. When everyone understands how their input affects downstream AI processes, data quality becomes everyone's responsibility, not just IT's problem.

Mistake #2: Accepting Duplicate Data Across Systems

The Problem: Your CRM has one version of a customer, your ERP has another, and your marketing automation platform has three more. Duplicate data doesn't just create confusion: it actively undermines AI training by presenting the same entity as multiple distinct data points.

This distorts your machine learning models, skews your analytics, and causes your AI systems to treat one customer as five different people. The result? Personalization algorithms that can't personalize, recommendation engines that recommend poorly, and predictive models that predict incorrectly.

The Fix: Deploy data merging tools like Talend or implement master data management (MDM) solutions that create a single source of truth for each entity. But here's what most organizations miss: prevention is cheaper than cure. Establish clear data entry protocols that prevent duplicates from being created in the first place.

Create unique identifier standards across all systems and implement real-time duplicate checking during data entry. Your AI transformation depends on clean, unified datasets: not multiple versions of the same information competing for algorithmic attention.
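A duplicate check at entry time can be sketched roughly as follows. This assumes an exact match on a normalized name and email, which is a simplification: commercial MDM tools typically add fuzzy matching on top. The `match_key` and `find_duplicates` names and the sample records are illustrative only.

```python
def match_key(record: dict) -> tuple:
    """Build a normalized key so trivially different entries compare equal."""
    email = record["email"].strip().lower()
    # Lowercase the name and collapse runs of whitespace.
    name = " ".join(record["name"].lower().split())
    return (name, email)

def find_duplicates(records: list[dict]) -> list[tuple[dict, dict]]:
    """Return (first_seen, duplicate) pairs for records sharing a match key."""
    seen = {}
    duplicates = []
    for record in records:
        key = match_key(record)
        if key in seen:
            duplicates.append((seen[key], record))
        else:
            seen[key] = record
    return duplicates

records = [
    {"source": "crm", "name": "Jane  Doe", "email": "Jane.Doe@example.com"},
    {"source": "erp", "name": "jane doe", "email": "jane.doe@example.com "},
    {"source": "marketing", "name": "John Roe", "email": "john.roe@example.com"},
]
pairs = find_duplicates(records)
for original, duplicate in pairs:
    print(duplicate["source"], "duplicates", original["source"])
```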

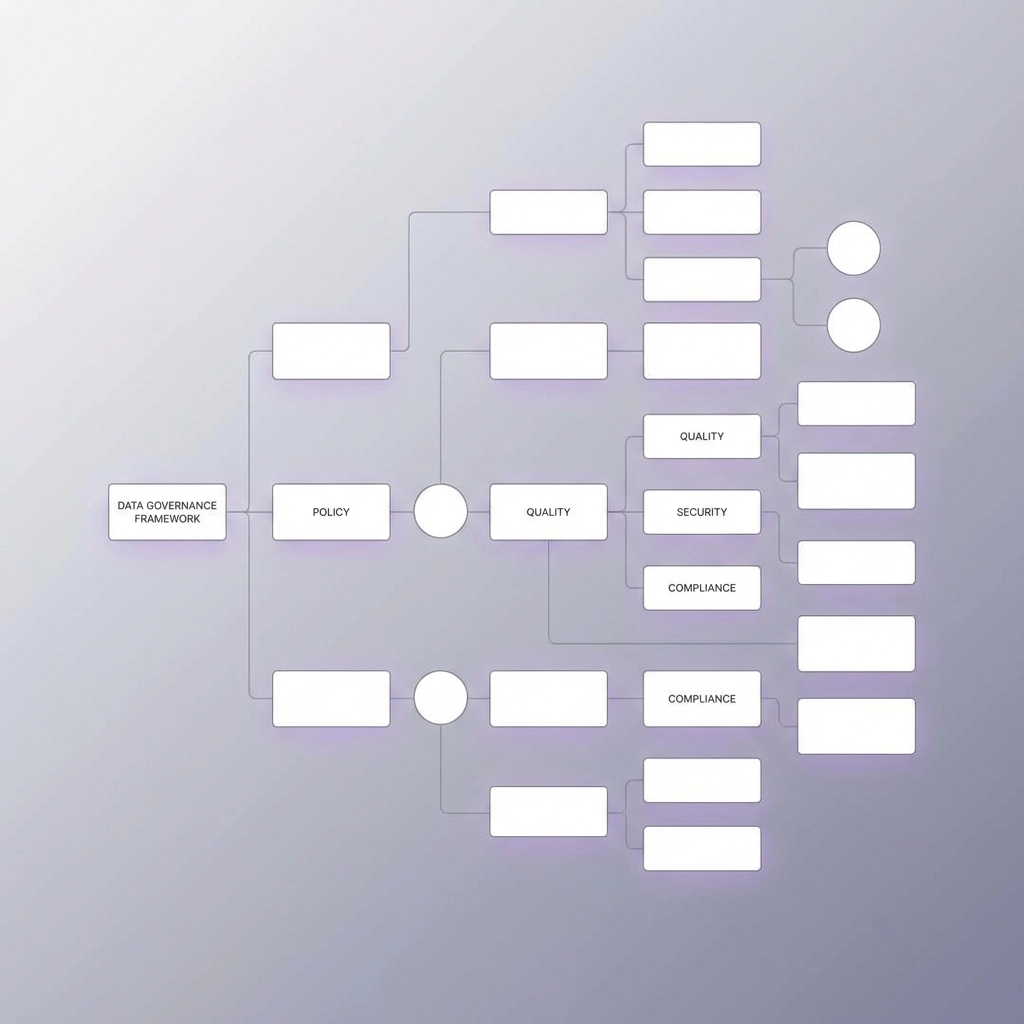

Mistake #3: Operating Without Data Governance

The Problem: When everybody owns your data, nobody really does. Without clear governance policies, different departments follow different rules (or no rules at all), creating inconsistent definitions of what constitutes "clean" data.

Your sales team considers a lead "qualified" based on one set of criteria, while your marketing team uses completely different standards. When AI systems try to learn from this inconsistently defined data, they can't identify meaningful patterns because the patterns themselves are inconsistent.

The Fix: Assign specific data ownership to individuals or teams for different data types. Implement a RACI model (Responsible, Accountable, Consulted, Informed) that clearly defines who's responsible for data quality in each domain.

Develop a centralized business glossary using platforms like Collibra or Alation. When everyone uses the same definitions for the same concepts, your AI systems can learn consistent patterns that actually reflect business reality rather than departmental interpretation.

Mistake #4: Ignoring Format Inconsistencies

The Problem: Your data exists in a Tower of Babel where dates appear as MM/DD/YYYY in one system and DD-MM-YY in another, phone numbers sometimes include country codes and sometimes don't, and currency amounts randomly switch between symbols and abbreviations.

These format inconsistencies break AI algorithms that expect structured, consistent input. Your machine learning models can't identify patterns when the same information appears in dozens of different formats across your data landscape.

The Fix: Define format rules organization-wide and enforce them religiously. Establish standard formats for dates, phone numbers, addresses, currency amounts, and any other data that appears across multiple systems.

Implement data transformation pipelines that automatically standardize formats as information moves between systems. Create validation rules that reject non-standard formats at the point of entry. Your AI systems need predictable data structures to identify meaningful patterns.
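As a rough illustration of a standardization step, the sketch below converts the two date formats mentioned above to ISO 8601 and reduces phone numbers to a single `+<digits>` form. The `SOURCE_DATE_FORMATS` mapping, the system names, and the default country code are assumptions made for the example. Note that a date like 03/04/2025 is ambiguous on its own, so the sketch relies on knowing which system produced the value.

```python
from datetime import datetime

# Assumed mapping from source system to the date format it emits.
SOURCE_DATE_FORMATS = {"crm": "%m/%d/%Y", "erp": "%d-%m-%y"}

def standardize_date(value: str, source: str) -> str:
    """Parse a date using the source system's known format; return ISO 8601."""
    parsed = datetime.strptime(value.strip(), SOURCE_DATE_FORMATS[source])
    return parsed.date().isoformat()

def standardize_phone(value: str, default_country_code: str = "1") -> str:
    """Reduce a phone number to '+<country code><digits>'.

    Assumes a number without a leading '+' is a national number
    belonging to the default country.
    """
    digits = "".join(ch for ch in value if ch.isdigit())
    if not digits:
        raise ValueError(f"no digits found in phone number: {value!r}")
    if value.strip().startswith("+"):
        return "+" + digits
    return "+" + default_country_code + digits

print(standardize_date("12/30/2025", "crm"))
print(standardize_date("30-12-25", "erp"))
print(standardize_phone("(555) 010-0199"))
print(standardize_phone("+44 20 7946 0958"))
```

Raising an error on unparseable input, instead of passing it through, is what turns a formatting convention into an enforced rule.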

Mistake #5: Living with Data Silos

The Problem: Your systems don't talk to each other, so your data lives in isolated islands that never connect. Sales data stays in Salesforce, financial data remains in your ERP, and marketing data never leaves HubSpot. When AI systems can't access comprehensive datasets, they make decisions based on incomplete information.

This fragmentation prevents AI models from identifying cross-functional patterns and relationships that could drive significant business value. Your predictive analytics can't predict customer churn if they can't see the full customer lifecycle across all touchpoints.

The Fix: Implement data integration platforms like Fivetran or MuleSoft that create automated connections between disparate systems. But integration isn't enough: you need to establish which system serves as the "source of truth" for specific data elements.

Create data standardization processes that reconcile differences as information moves between systems. Design your integration architecture to support real-time data sharing rather than batch updates that leave your AI systems working with stale information.
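One simple way to express "source of truth" is a field-level ownership map that the integration layer consults when systems disagree. The sketch below is illustrative: the `SOURCE_OF_TRUTH` mapping, the system names, and the fields are invented for the example, not taken from any particular platform.

```python
# Hypothetical field-level ownership: which system wins for each attribute.
SOURCE_OF_TRUTH = {
    "email": "crm",
    "billing_address": "erp",
    "newsletter_opt_in": "marketing",
}

def reconcile(records_by_system: dict[str, dict]) -> dict:
    """Merge per-system records into one, taking each field from its owning system."""
    merged = {}
    for field, owner in SOURCE_OF_TRUTH.items():
        owner_record = records_by_system.get(owner, {})
        if field in owner_record:
            merged[field] = owner_record[field]
    return merged

customer = reconcile({
    "crm": {"email": "jane@example.com", "billing_address": "old address"},
    "erp": {"billing_address": "1 Main St", "email": "stale@example.com"},
    "marketing": {"newsletter_opt_in": True},
})
print(customer)
```

Here the ERP's stale email and the CRM's old address are both ignored, because neither system owns that field.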

Mistake #6: Accepting Incomplete Data as Normal

The Problem: Your teams have become comfortable working with partial datasets, filling in gaps with assumptions and best guesses. But AI systems don't make educated guesses: they identify patterns in available data and extrapolate from there. When significant portions of your data are missing, AI models learn from incomplete patterns that don't reflect business reality.

Missing data creates blind spots in your AI systems that can lead to biased outcomes, failed predictions, and automated decisions that overlook critical factors.

The Fix: Deploy data cataloging tools to create a comprehensive inventory of your organizational data assets and identify gaps systematically. Implement continuous monitoring that tracks completeness metrics and alerts you when critical data elements are missing.
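A completeness metric can be as simple as the share of records with a non-empty value per field. The following sketch assumes records arrive as dictionaries and that `None` or an empty string means "missing". The function names and the 0.7 threshold are illustrative choices.

```python
def completeness(records: list[dict], fields: list[str]) -> dict[str, float]:
    """Return the fraction of records with a non-empty value for each field."""
    total = len(records)
    report = {}
    for field in fields:
        filled = sum(1 for r in records if r.get(field) not in (None, ""))
        report[field] = filled / total if total else 0.0
    return report

def fields_below_threshold(report: dict[str, float], threshold: float) -> list[str]:
    """List the fields whose completeness falls below the alert threshold."""
    return sorted(f for f, ratio in report.items() if ratio < threshold)

records = [
    {"email": "a@example.com", "industry": "retail"},
    {"email": "b@example.com", "industry": ""},
    {"email": "c@example.com", "industry": "finance"},
    {"email": None},
]
report = completeness(records, ["email", "industry"])
alerts = fields_below_threshold(report, threshold=0.7)
print(report)
print(alerts)
```

Running a check like this on a schedule and alerting on the result is a lightweight starting point before investing in a full cataloging tool.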

Establish data collection protocols that prioritize completeness from the source rather than attempting to fill gaps downstream. Design your data architecture to capture comprehensive information at each customer touchpoint, transaction, and business process.

Mistake #7: Relying on Batch Updates and Manual Processes

The Problem: Your data refresh happens overnight, your reports update weekly, and your manual synchronization processes mean your AI systems are always working with yesterday's information. In a real-time business environment, stale data leads to outdated decisions and missed opportunities.

AI systems performing real-time personalization, dynamic pricing, or predictive maintenance need current information to function effectively. When your data is hours or days behind reality, your AI recommendations become increasingly irrelevant.

The Fix: Implement real-time data streaming using platforms like Apache Kafka or AWS Kinesis that update your AI systems as business events occur. Deploy schema monitoring tools like Monte Carlo or Databand that track changes in data structure before they break downstream processes.

Establish continuous data quality monitoring that catches issues immediately rather than discovering them days later. Design your data architecture for real-time operations rather than batch processing, enabling your AI systems to respond to business conditions as they actually exist.

Making Your Teams AI-Ready: The Path Forward

Here's what separates AI-ready organizations from those still struggling with transformation: they treat data quality as a strategic capability, not a technical requirement. Every process, every system, and every team member contributes to the data foundation that enables intelligent automation.

Your AI transformation success depends on fixing these seven mistakes before you deploy another algorithm or purchase another platform. Clean, consistent, complete, and current data isn't just a nice-to-have: it's the foundation that determines whether your AI initiatives drive business value or drain business resources.

The organizations winning with AI aren't necessarily the ones with the most sophisticated algorithms. They're the ones with the most sophisticated approach to data quality. Start there, and your teams will be AI-ready before your competitors even understand what that means.

Ready to audit your data quality and accelerate your AI transformation? Contact Value Chain Management to discover how we help organizations build the data foundation that enables intelligent automation and sustainable competitive advantage.
